For the Computer Graphics course at ETH Zurich, we were given Nori, a very minimalistic ray tracer written in C++, and extended it step by step over a series of assignments. For our final project, we were free to choose from a diverse set of advanced features and implement them however we saw fit. I implemented the following features:

- Texture Mapping

- Procedural Textures

- Normal Mapping

- Different Emitters: Environment Map Emitters, Textured Area Emitters, Spotlights, Point Lights

- Realistic Camera Models

In the following, I’ll briefly describe two of the more interesting features, namely environment map emitters and realistic camera models, and showcase our final rendering, which earned us 3rd place at the 2023 Rendering Competition organized by ETH Zurich and Disney Research. For a deeper dive into the implementation details and correctness validation, feel free to check out the full project report.

## Realistic Camera Models

Many rendering techniques use clever tricks to simulate photographic effects like depth of field, bokeh, and vignetting. With ray tracing, however, we can simulate an actual lens system and get all of these effects naturally, without any fakes or shortcuts! The main challenge? Efficient ray sampling. A naïve approach wastes many samples on rays that are blocked inside the lens system and never reach the scene, which leads to unbearably long render times. Optimizing my sampling strategy was therefore crucial to keep render times in the realm of seconds or minutes instead of hours.
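The following minimal C++ sketch makes "simulating an actual lens system" concrete. It traces a single ray from the film through a stack of spherical lens interfaces. At each surface the ray is refracted with Snell's law, and the ray is discarded if an element's rim or the aperture stop blocks it.

The sketch follows the general scheme of pbrt's `RealisticCamera`, not our Nori code. All names and conventions are assumptions made for this example:
- the `LensInterface` layout;
- the film at z = 0, with the optical axis along +z;
- signed curvature radii, where a radius of 0 marks a planar aperture stop.

```cpp
#include <cmath>
#include <optional>
#include <vector>

// Minimal 3D vector, just enough for tracing rays through lens elements.
struct Vec3 {
    double x, y, z;
    Vec3 operator+(const Vec3& o) const { return {x + o.x, y + o.y, z + o.z}; }
    Vec3 operator-(const Vec3& o) const { return {x - o.x, y - o.y, z - o.z}; }
    Vec3 operator*(double s) const { return {x * s, y * s, z * s}; }
};
inline double dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline Vec3 normalize(const Vec3& v) { return v * (1.0 / std::sqrt(dot(v, v))); }

struct Ray { Vec3 o, d; };

// Hypothetical lens description, listed from the film (z = 0) towards the scene (+z).
// radius: signed curvature radius, sphere center at z + radius; 0 marks a planar aperture stop.
// etaAfter: index of refraction on the scene side of the interface (ignored for the stop).
struct LensInterface { double radius, z, etaAfter, apertureRadius; };

// Refracts unit direction d at a surface whose unit normal n faces against d (Snell's law).
// eta is the ratio etaIncident / etaTransmitted. Returns std::nullopt on total internal reflection.
std::optional<Vec3> refract(const Vec3& d, const Vec3& n, double eta) {
    double cosI = -dot(n, d);
    double sin2T = eta * eta * (1.0 - cosI * cosI);
    if (sin2T > 1.0) return std::nullopt;
    double cosT = std::sqrt(1.0 - sin2T);
    return d * eta + n * (eta * cosI - cosT);
}

// Traces a ray leaving the film through every interface. Returns the ray exiting the
// front element, or std::nullopt if it is blocked by an aperture or totally reflected.
std::optional<Ray> traceLenses(const std::vector<LensInterface>& lenses, Ray r) {
    double eta = 1.0; // air between the film and the rear element
    for (const LensInterface& L : lenses) {
        double t;
        if (L.radius == 0.0) {
            t = (L.z - r.o.z) / r.d.z; // planar aperture stop
        } else {
            Vec3 c{0.0, 0.0, L.z + L.radius};
            Vec3 oc = r.o - c;
            double b = dot(oc, r.d);
            double disc = b * b - (dot(oc, oc) - L.radius * L.radius);
            if (disc < 0.0) return std::nullopt; // ray misses the sphere entirely
            double s = std::sqrt(disc);
            // Pick the root on the side of the sphere that contains the interface vertex.
            bool useCloser = (r.d.z > 0.0) == (L.radius > 0.0);
            t = useCloser ? -b - s : -b + s;
        }
        if (t <= 0.0) return std::nullopt;
        Vec3 p = r.o + r.d * t;
        if (p.x * p.x + p.y * p.y > L.apertureRadius * L.apertureRadius)
            return std::nullopt; // vignetted by this element or by the stop
        if (L.radius != 0.0) {
            Vec3 n = normalize(p - Vec3{0.0, 0.0, L.z + L.radius});
            if (dot(n, r.d) > 0.0) n = n * -1.0; // make the normal face the incoming ray
            std::optional<Vec3> dt = refract(r.d, n, eta / L.etaAfter);
            if (!dt) return std::nullopt;
            r.d = normalize(*dt);
            eta = L.etaAfter;
        }
        r.o = p;
    }
    return r;
}
```

As an example, consider a hypothetical biconvex singlet (radii 20 and -20, index 1.5, aperture radius 5) followed by a stop of radius 4:
- An axial ray passes straight through.
- A ray aimed at height 8 on the rear element returns `std::nullopt`.

Rejected rays like the second one are what make naïve sampling expensive. If film-to-rear-element directions are drawn uniformly, many of them are wasted. One common remedy, used by pbrt, is to precompute bounds on the exit pupil and sample only directions inside them.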

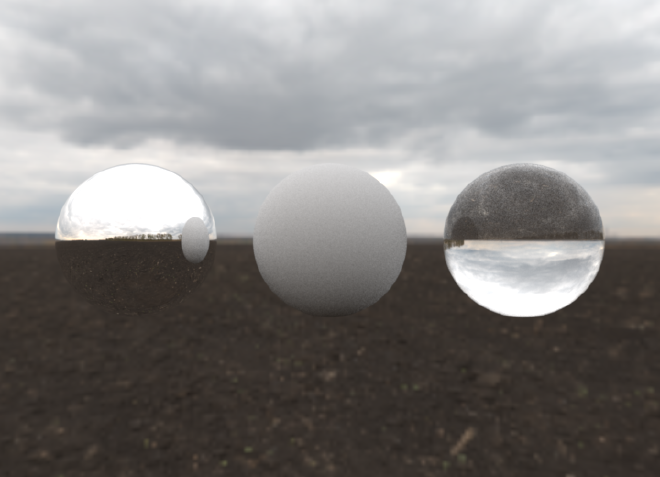

## Environment Map Emitters

An environment map emitter consists of a texture that surrounds the entire scene and acts as an infinitely distant light source. This technique is an elegant way to create rich, immersive lighting and surroundings without the computational cost of complex geometry. As with the realistic camera model, the most involved part was implementing good importance sampling, so that directions toward bright regions of the map are sampled more often, to reduce render times.
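A common way to importance-sample a latitude-longitude environment map is used, for instance, by pbrt's `InfiniteAreaLight`. Pixel luminance, weighted by sin θ, is treated as a piecewise-constant 2D distribution. A row is picked from a marginal CDF, then a column from that row's conditional CDF. Finally, the density is converted from image space to solid angle.

The sketch below illustrates this idea; it is not our actual Nori implementation. The following names and conventions are assumptions made for illustration:
- the `EnvMapSampler` class;
- a z-up mapping with θ = 0 at the top row;
- luminance passed as a flat row-major array that contains at least one nonzero pixel.

```cpp
#include <algorithm>
#include <cmath>
#include <vector>

constexpr double kPi = 3.14159265358979323846;

struct Direction { double x, y, z; };

// A sampled direction, its solid-angle pdf, and the pixel it came from.
struct EnvSample { Direction dir; double pdf; int row, col; };

class EnvMapSampler {
public:
    // luminance: row-major width*height values; row 0 maps to theta = 0 (the +z pole).
    EnvMapSampler(const std::vector<double>& luminance, int width, int height)
        : w_(width), h_(height),
          condCdf_(height, std::vector<double>(width + 1, 0.0)),
          margCdf_(height + 1, 0.0), rowSum_(height, 0.0) {
        for (int r = 0; r < h_; ++r) {
            // Rows near the poles cover less solid angle, so scale them by sin(theta).
            double sinTheta = std::sin(kPi * (r + 0.5) / h_);
            for (int c = 0; c < w_; ++c)
                condCdf_[r][c + 1] = condCdf_[r][c] + luminance[r * w_ + c] * sinTheta;
            rowSum_[r] = condCdf_[r][w_];
            margCdf_[r + 1] = margCdf_[r] + rowSum_[r];
        }
        total_ = margCdf_[h_];
    }

    // Maps two uniform numbers in [0, 1) to a direction and its pdf w.r.t. solid angle.
    EnvSample sample(double u1, double u2) const {
        // Marginal: choose a row, and reuse the leftover fraction as a continuous offset.
        double x = u1 * total_;
        int row = findInterval(margCdf_, x);
        double dv = (x - margCdf_[row]) / rowSum_[row];
        // Conditional: choose a column within that row.
        const std::vector<double>& cdf = condCdf_[row];
        double y = u2 * rowSum_[row];
        int col = findInterval(cdf, y);
        double pixelWeight = cdf[col + 1] - cdf[col];
        double du = (y - cdf[col]) / pixelWeight;

        double theta = kPi * (row + dv) / h_;
        double phi = 2.0 * kPi * (col + du) / w_;
        double sinTheta = std::sin(theta);
        Direction dir{sinTheta * std::cos(phi), sinTheta * std::sin(phi), std::cos(theta)};

        // Density over the unit (u, v) square is constant inside each pixel; the
        // Jacobian d(omega) = 2 * pi^2 * sin(theta) du dv converts it to solid angle.
        double pdfUV = pixelWeight / total_ * w_ * h_;
        double pdf = sinTheta > 0.0 ? pdfUV / (2.0 * kPi * kPi * sinTheta) : 0.0;
        return {dir, pdf, row, col};
    }

private:
    // Returns i with cdf[i] <= x < cdf[i + 1]; zero-weight bins are skipped automatically.
    static int findInterval(const std::vector<double>& cdf, double x) {
        auto it = std::upper_bound(cdf.begin(), cdf.end(), x);
        int i = static_cast<int>(it - cdf.begin()) - 1;
        return std::clamp(i, 0, static_cast<int>(cdf.size()) - 2);
    }

    int w_, h_;
    std::vector<std::vector<double>> condCdf_;
    std::vector<double> margCdf_, rowSum_;
    double total_ = 0.0;
};
```

The sin θ factor compensates for rows near the poles covering much less solid angle than rows near the horizon. Without it, bright pixels close to the poles would be oversampled relative to their contribution. The returned `pdf` is in solid-angle measure, which is what a Monte Carlo estimator (or multiple importance sampling against BSDF samples) expects.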

## Final Rendering

The theme of our rendering competition was “The more you look”, which immediately reminded us of M.C. Escher’s famous lithograph *Relativity*, since one surely has to look at it multiple times to understand what is going on. We worked in teams of two on a shared renderer, and my teammate focused on a distinct set of features, such as heterogeneous participating media. Here is the result: